tl;dr: This article treats strategy deployment as a series of testable hypotheses, or predictions. That framing supports data-driven methods, agile adjustment, and a culture of continuous improvement and adaptive strategic planning.

Even with 20 years of experience in studying, applying, and teaching Lean principles (“Practicing Lean,” if you will), I have relatively limited experience with the “strategy deployment” methodology. I've never had the opportunity to work full-time in an organization that had a mature strategy deployment (SD) process and culture.

I have had, thankfully, the opportunity to learn from many visits to ThedaCare about how they do SD (including helping to lead the production of this DVD). As a consultant, I've learned from other consultants who are more experienced with SD, and I've supported ongoing SD efforts by coaching people on A3 thinking and Plan-Do-Study-Adjust (PDSA) problem solving.

Within the past year, I've had the chance to work with Karen Martin at a health system that is getting started with a brand new SD process. I am again supporting them in the areas where I have more experience: the A3 process, PDSA problem solving, Kaizen, value stream mapping, and general Lean thinking.

But back to SD… one key reflection of mine on this work and learning is:

It seems to me that a strategy deployment process can be described as a series of hypotheses that are tested over time. Strategy deployment is a high-level annual PDSA cycle that contains embedded PDSA cycles of analysis, improvement, measurement, and adjustment.

An organization, whether it is practicing Lean or not, generally already has a defined mission, an articulated vision, and a set of stated values. Whether the mission, vision, or values are correct seems like something that can only be tested through an ongoing PDSA mindset and reflection over many years.

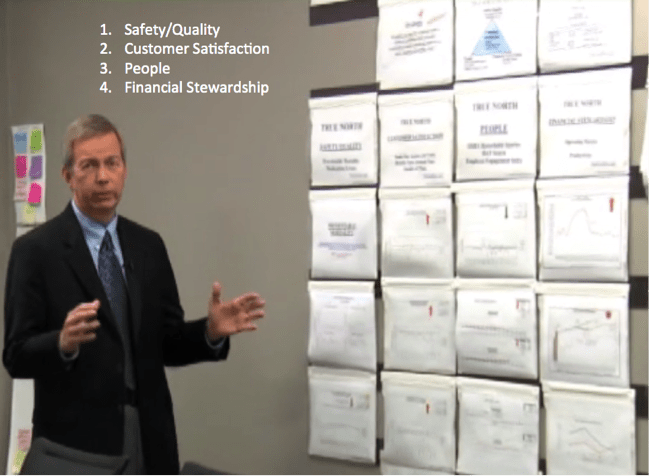

In the SD process, an organization defines four (or maybe five) key objectives and goals (called “focus areas” at ThedaCare). Pictured below is a screenshot of ThedaCare CEO Dean Gruner, MD as he talks about strategy deployment in the DVD I mentioned earlier.

Dean is standing in front of one of the walls in their executive meeting room. ThedaCare's four “true north” focus areas are:

- Safety / Quality

- Customer Satisfaction

- People

- Financial Stewardship

These four areas are important and meaningful to ThedaCare, as they might be to other hospitals (but that doesn't make these the “right” true north objectives that all must use or copy).

But this also seems to be the first hypothesis, which could be stated as:

Hypothesis #1: “If we focus our improvement efforts and close performance gaps in these four areas, we will therefore perform well as an organization, this year and over the long-term.”

At ThedaCare, the “true north” focus areas tend to stay the same each year, since they are the compass and direction for the organization. These shouldn't change every year. These should be an example of what Dr. W. Edwards Deming called “constancy of purpose.” These areas are interconnected and mutually supportive, since a hospital or healthcare organization is a system, as Deming would have explained.

An organization could choose to change or replace a true north focus area if that first hypothesis seemed to not be working out as expected over time. It's hard to see how doing well in a focus area like “customer satisfaction” would not improve overall organizational performance. But maybe the broader conditions have changed and the senior team decides that a different key focus area should be brought in instead.

Under each of the four focus areas are two to three key performance indicators (KPIs) that are tracked and are watched closely by the senior leadership team (SLT) on a monthly basis, if not more frequently. These specific metrics are chosen because they are the specific areas in which the organization needs to improve this year. And, breakthrough improvement projects (managed via A3s) are chosen to drive improvement in those metrics.

So that seems to be a second hypothesis:

Hypothesis #2: “If we can improve and close our performance gaps in these key performance indicators, we will satisfy our need for improvement in our key focus areas, and therefore we will be successful as an organization, overall.”

You might call these “focus metrics,” because they are providing focus to the SLT within their true north focus areas. Instead of looking at 100 measures and trying to drive improvement in all of those measures, the SD approach tells us that it's better to pay attention to a few high-leverage areas instead of spreading our attention and efforts too thin. I visited one organization a few years ago that bragged about being down to “just 37 focus metrics.” Well, I guess that's progress. As they “study and adjust” over time (PDSA) they might realize that 37 isn't really focused enough. ThedaCare has about ten of these focus metrics each year.

These KPIs or focus metrics change more often in an organization than do the true north focus areas. Under the “Safety/Quality” area, a hospital might initially measure medication errors and patient falls. But, after making big improvements in those particular KPIs, they might shift to measuring things like hospital-acquired infections and overall mortality instead. Changing the KPIs after a yearly SD cycle doesn't mean that it's no longer important to prevent medication errors. It's likely still something that's being measured somewhere in the organization. But, it means that it's not one of the key indicators that the SLT needs to be looking at constantly throughout the year.

How do we close the gaps in performance? How do we ensure we have enough organizational capacity to do so?

Is your organization using strategy deployment? What hypotheses are you testing? What are the results of those tests?

Also, see this webinar that I did in July 2016:

Well presented. I think the challenge, at least in healthcare, is truly getting to 10 key metrics. I visited the TC measurement room a few years ago and it was plastered with metrics. I know they weren’t “focusing” on all the metrics, but nonetheless there were more than 10 if my memory serves me. Things like meaningful use and value-based purchasing drive some of this.

It would be nice to see how TC takes the top-level goals down to the next and the next levels.

Good question. I’ve seen the same thing on an additional third wall at ThedaCare. I’d have to ask somebody there if those charts represent “the next level down” of metrics that roll up or contribute to the key True North metrics.

In general, I’d also ask if organizations that use charts like this apply proper SPC thinking to them or not. Do they have to explain every up and down point in the chart? Explain every point below some arbitrary goal? Mike Stoecklein from the ThedaCare Center for Healthcare Value and I are going to do an eBook around that topic – the smart use of statistical thinking and understanding variation for management metrics.

Hi Mark,

We’re working through our SD process at the moment, and this post is helpful.

I like your use of Hypotheses…it implies that SD should be a scientific problem solving process applied to an organization and its culture.

Seems to me that with healthcare, you have the opportunity to use some familiar analogies. Isn’t SD like patient care applied to your organization?

You could treat it like your organization coming in for a yearly checkup…

First the patient takes stock of what ails them. They reflect on last year and think about the aches and pains they want to talk to their doctor about. They also think about what changes they tried to make, and their successes and failures.

Next they visit their doctor, and have the discussion about their health. The doctor captures all of their concerns, lists them, and tries to prioritize what to work on.

Part of this process may involve some tests. The doctor has some ideas about the causes of problems, but needs more data. They are developing their hypothesis of the cause of the patient’s issues.

From the result of this data gathering, along with their observations and insight, they form a diagnosis. But we know that a diagnosis is rarely 100% accurate. They are solidifying their hypotheses and developing a plan to test them with treatments.

Next step is working with the patient and staff to execute the treatment plan. As a team, we are conducting a series of experiments to see if our hypotheses are correct…that we have correctly diagnosed our patient, and that the common treatments will be successful.

We’ll probably plan some check-ups along the way to monitor progress on our experiments. Barring any issues that require immediate attention, we’ll plan to meet next year and do this all again.

Next year comes, and the cycle repeats. We begin the year with a reflection on the previous year. We’re analyzing the results of the experiments we conducted, and figuring out which hypotheses were proven, and which didn’t work out as we hoped. We’re also adding any new issues. We’re looking at our New Year’s resolutions and deciding what new changes we want to accomplish this year. We’re also looking at our usual pain points, and thinking about what new approaches this year might help…where last year’s actions didn’t.

Hope this helps…Jeff

Thanks for the comments, Jeff. I think there are some parallels. I think there’s typically more of a “monthly checkup” with strategy deployment, along with ongoing assessment of KPIs and progress on A3s. It’s an annual cycle, but like you said, it won’t work if it’s just an annual exam or annual planning process.

It’s a hard mindset shift to go from a determinate “PLAN” (that we must execute and cannot admit “failure” on) to a true PDSA mindset. Most execs are steeped in Plan-Do.

Dear Mark

Thank you for the invaluable post. I wonder if the second part of this post is out yet. If so, could you kindly share a link to it? If not, I look forward to reading it.

With kind regards

Anastasia Sayegh

Thanks — I still need to publish Part 2. It’s mostly written, so thanks for the reminder to finish this series. I’ll try to remember to post a link to it in the comment thread here.

Here is Part 2… sorry it took so long.
