I'm not sure why “error” was put in quotes since this seems like an actual error, not a quote-unquote error:

Staff ‘error’ blamed for chlorine leak at Louth's Kenwick Park Hotel which led to five being taken to hospital

When people talk about human error, they often seem to miss the point. They think calling something human error is an excuse. No.

Human error is GOING to happen, because we are fallible. That's why we need good systems, tools, and processes and we can't just ask people to “be more careful” and we can't just blame them after an error occurs.

As the article describes, a hotel employee was mixing chlorine and swimming pool chemicals when something went wrong, releasing gas. This sent five people to the hospital, where all were expected to recover and be unharmed.

Speaking at around 6pm yesterday, Mr Flynn said: “A member of staff got the mixture wrong in the plant room, releasing the chemical.

“It was human error.”

It seems like a factual statement to say that the mixture was wrong… and that's about it.

Ascribing it simply to “human error” often implies “well, there's nothing more we could have done… it was human error, don't blame the management or the organization… blame that individual. We'll punish that person and move on.”

However, if we ask “why was the mixture wrong?” (and keep asking why) there are possible systemic factors including (just guessing here):

- Unlabeled or poorly-labeled containers that led to the wrong chemical being used (a system problem)

- Lack of proper tools for measuring the chemicals (a system problem)

- Poorly trained staff (a system problem)

- The person doing the work was rushed or distracted (a system problem)

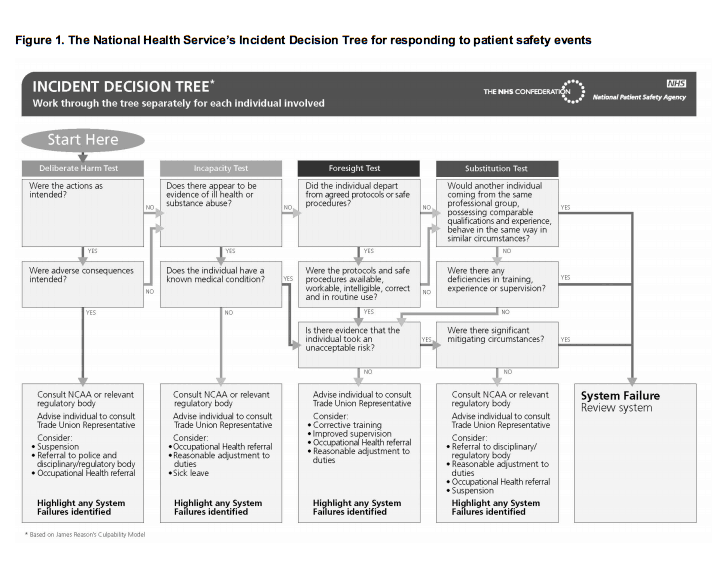

These are all system problems. The Just Culture methodology and this flowchart provide better problem-solving tools than asking “who screwed up?” In most instances, the flowchart points to “system problem,” and there are just a few circumstances where individual punishment is recommended.

Leaders need to be proactive to prevent errors and situations like the one described in the article.

There's another blaming statement:

“One of the leisure club operators got the mixture wrong when preparing some chemicals and there was a reaction, so we evacuated and alerted the fire brigade.”

Rarely do we hear about what the leaders and the organization did wrong. Rarely are leaders held accountable for the results the system produces… and top leaders are responsible for the system, as Dr. Deming so clearly pointed out.

Recently, the CEO of the South Korean company that operated the ferry that sank, killing 188 people, resigned and was arrested. The boat was allegedly overloaded with cargo – something that is quite likely a system error as opposed to being one captain's fault. How did company policies, targets, training, and systems contribute to that?

Back to the hotel article:

He added there had never been an incident of this nature at the hotel before.

He said: “We have been here 19 years and this has never happened before so we have got a good record.

“The operator obviously made a mistake.

“We're all human. If you're not absolutely careful these things can happen.”

Not having an incident for a long time is NOT a good predictor of an error-free future. We see this in many well-known cases of medical error… a person with a sterling track record is involved in an accident and gets blamed, usually unfairly.

You can see the undeserved faith put into “being absolutely careful.” Things can go horribly wrong even with an absolutely careful person. As I've said before… being careful is a good start. It's helpful, but not sufficient for quality and safety.

What is your organization doing to reduce blame? Are you using “Just Culture” in healthcare or other industries? Let me know what you think by leaving a comment.

Thank you for providing the information about Just Culture and the incident decision trees. I think such systematic failure analysis tools especially can help to put the human element of failures into the right perspective.

Blaming statements like your examples are very natural. We perceive the job world as human made, so a human must be the reason for failures. The reason for that is that most people are neither taught nor used to “thinking in systems and processes.”

In my mind, blame in the professional world is a variant of what Taiichi Ohno called “motion is not work.” When you blame (or take the blame), you can move without having to work. Nobody needs to change. The system can fall back into its assumed stable state. Or, to put some blame into this observation: it is a matter of organized laziness.

Yeah, blaming and punishing a person is “easy” and often not questioned by society. We all want quick, neat, and tidy answers and solutions. Saying we punished somebody lets everybody feel like they did something… when it probably wasn’t the right thing or the thing that will prevent future errors.

How do you prevent errors in mixing pool chemicals? Given that the same mix is used repeatedly, you might want to buy the mix directly rather than its components, in doses matching exactly the amount needed every day. Failing that, you could invest in an automatic dispenser/mixer.

Relying on humans to handle and manually dose dangerous chemicals on a daily basis is an accident waiting to happen. This organization was lucky not to have any in 19 years, or at least any reported.

Mark,

The importance of your point concerning reporting accidents is excellent. When operating in a blaming culture, people tend to try to cover up errors to avoid blame and other recriminations. When the culture is open to a rational assessment of the factors that contributed to the error and people do not feel threatened, they are much more likely to report safety incidents. For example, Human Factors researchers in aviation have introduced numerous corrections to processes and procedures through the analysis of incident data entered in NASA’s Aviation Safety Reporting System by pilots and other aviation professionals. By having this type of tool available to them, people can make meaningful contributions to solving safety problems.

Great post! This reminds me of an A3 I coached where the author stopped his five whys at “human error.” Asking a few more whys after this uncovered causes such as distractions from phone calls, unclear or conflicting input information, variation of formats for information to be input, and a host of other causes.

Besides the importance of removing a blame culture, we also need to help people recognize they can influence their work instead of throwing hands up with “we are human therefore mistakes are bound to happen”. A couple of deeper whys will bring you to something you can impact.

Great point, Brian. We need to recognize that human error is inevitable. That means we need to work really hard to prevent errors through process and system improvement. We can minimize mistakes or, more likely, create robust systems that minimize the impact of a human error.

To Michel’s point, buying pre-mixed solution or having better process controls would certainly help us not rely on luck.

Dr. Atul Gawande writes about this well: a main point of checklists and other process controls is to prevent a doctor from having one bad day that ruins an otherwise spotless 20-year career. They might have been lucky for 19 years and then a human error causes a major problem. They’ll likely get blamed (or the blame rolls downhill to somebody with less power). That’s why we need prevention beyond saying “be careful” and reactions beyond “you’re in trouble.”

This is a good trick: whenever a root cause analysis comes upon “human factor,” you know some more whys are necessary.

Mark,

This is a great post and example of what is too often settled on as “root cause”. We try to teach that if “5 Whys” leads you to a person, you’ve gone down the wrong track. Just Culture is a good method to put the brakes on during an investigation, and we use it. Another tool to consider during problem solving is OSHA’s Hierarchy of Control. Michel Baudin nailed it when suggesting elimination of the risk altogether. Elimination sits at the top of OSHA’s Hierarchy. Policies and Education sit near the bottom, and yet they are too often seen as preventive action. They work, until they don’t. I’d like to use this chlorine gas example in an upcoming session.

Yes, “warnings” and caution signs are about the weakest form of error proofing and prevention. I’d agree that training, policies, and education are weak, as well.

Far too often, the supposed root cause is a person and the countermeasure is “re-training” or “more education,” if it’s not punishment. If the problem was really lack of training or poor training, the organization rarely asks why they had deficient training and education methods to begin with.

Of course, in keeping with this post’s theme, I committed a human error last night. I accidentally published early by forgetting to change the date to May 12. So, it published immediately (as of May 11) instead of being scheduled as the next day’s post.

I immediately clawed it back to be a draft post, but automated social media messages had gone out. So, I got a message asking why I had taken the post down. So, I went ahead and published it Sunday evening instead of Monday morning.

Ironically, a preventable human error!

But in contrast to five injured people, no real damage…

I made another blog error today, scheduling two posts for 5/15 at 5 am.

Oops.

I do that once every few months.

I don’t have good error proofing other than “being careful.”

So, the mistake happens. Then, I unpublish one of the posts and set it for the next day.

I wish WordPress had a feature that said “Warning: You already have a post scheduled for that day/time.” That might be helpful error proofing. Not perfect error proofing, but the warning would help, I think.
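To make the idea concrete, here is a minimal sketch of what such a warning check could look like. It is not WordPress code and does not use any WordPress API; the function names (`find_schedule_conflicts`, `schedule_warning`) and the dictionary of scheduled posts are hypothetical, invented only for illustration. It assumes two checks would be useful: one for a publish date that is not in the future (the "forgot to change the date" mistake) and one for a day that already has a post scheduled.

```python
from datetime import datetime


def find_schedule_conflicts(proposed, scheduled, same_day=True):
    """Return titles of already-scheduled posts that collide with `proposed`.

    `scheduled` is a hypothetical dict mapping a post title to its planned
    publish datetime. With same_day=True, any post on the same calendar date
    counts as a conflict; otherwise only an identical date and time does.
    """
    conflicts = []
    for title, when in scheduled.items():
        if same_day:
            clash = when.date() == proposed.date()
        else:
            clash = when == proposed
        if clash:
            conflicts.append(title)
    return conflicts


def schedule_warning(proposed, scheduled, now):
    """Build a warning message, or return None when the slot looks safe."""
    # A date that is not in the future means the post would go live right away.
    if proposed <= now:
        return ("Warning: that date is not in the future, "
                "so the post would publish immediately.")
    conflicts = find_schedule_conflicts(proposed, scheduled)
    if conflicts:
        return ("Warning: you already have a post scheduled for that day: "
                + ", ".join(conflicts))
    return None
```

Calling `schedule_warning(proposed, scheduled, now)` before saving would return a message to show the author, or `None` if nothing looks wrong. Like any warning, this only prompts a second look; it does not make the mistake impossible.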

Mark, if you allow, I would like to add a recent disaster to highlight that there is something even worse than blame: fatalism, especially from “top management,” when something goes wrong.

I’m sure you heard about the terrible accident in Turkey. While this BBC article is relatively neutral, “Turkey coal mine disaster: Desperate search at Soma pit,” this one here is much more revealing: “Turkey Soma Disaster: Mine Explosions ‘Normal’ Says Erdogan.”

Well, I’m sure there will be a large portion of blame shared as well…

If we don’t put effort into applying poka-yoke to systems and designs, people will continue blaming people.

[…] Blame: Human Error is Going to Happen Even If We’re Being Careful by Mark Graban. “Human error is GOING to happen, because we are fallible. That’s why we need […]

[…] From a quality and patient safety standpoint, too many healthcare organizations still rely on asking people to be careful, as if those “best efforts” are a way to prevent human error. The beatings (and blaming and punishing) will continue until quality and safety improve? Thankfully, some organizations are realizing that quality and safety must be built into the system, rather than being inspected in (or worse, “punished in” by blaming individuals who make inevitable mistakes). […]

I have worked in travel nursing for four years. I’ve come to the conclusion that if a hospital is using travel nurses, the hospital has a problem with technology, management, and leadership, as the majority do not want to work. Of course, the travel industry is one of recruiters who know nothing about what is going on at the hospital in question. I’ve glanced at some of these unit managers who use Lean or Six Sigma for one or two projects to save time, etc. For example, the corporate culture is often pressured by the government for numbers with the threat of fines, resulting in a big mess felt clear down to the bedside. I just got canned from an assignment with my first two overt errors. The hospital had installed a cut-up past Cerner system that was part paper and part digital. This was, so I am told, due to the impending HITECH deadline. I had worked with a budgeted full Cerner system, but this looked like some kind of deformed 2008 combo with clerks using Medtech. Phones did not work. Scanners in many rooms did not work. I missed a prescription script for a discharged patient. That happened frequently. Paper scripts were left around. I could go on. Just Culture was not applied at this and many other hospitals. We are talking about large facilities having thousands of patients. People often look at whatever makes the books look good. The big marketing used for those stats may be just lipstick on the pig.

Thanks for your comment and for sharing your story, Matt.

It sounds like you were blamed and fired for a mistake… such as missing that medication?

It doesn’t sound like a Just Culture. It doesn’t sound like that organization was setting you and others up for success.

I hope you find a better environment to work in… and best wishes to you.