As I've been re-reading Dr. Deming's Out of the Crisis, stuff like this jumps out at me more and more. I saw similar signs at Heathrow Airport last month bragging about the airport exceeding targets for customer service. This is, of course, the old “comparison to targets” approach to quality that Dr. Deming, Taguchi, and Wheeler have criticized for the past few decades.

Quality means more than meeting arbitrary targets set by some bureaucrat.

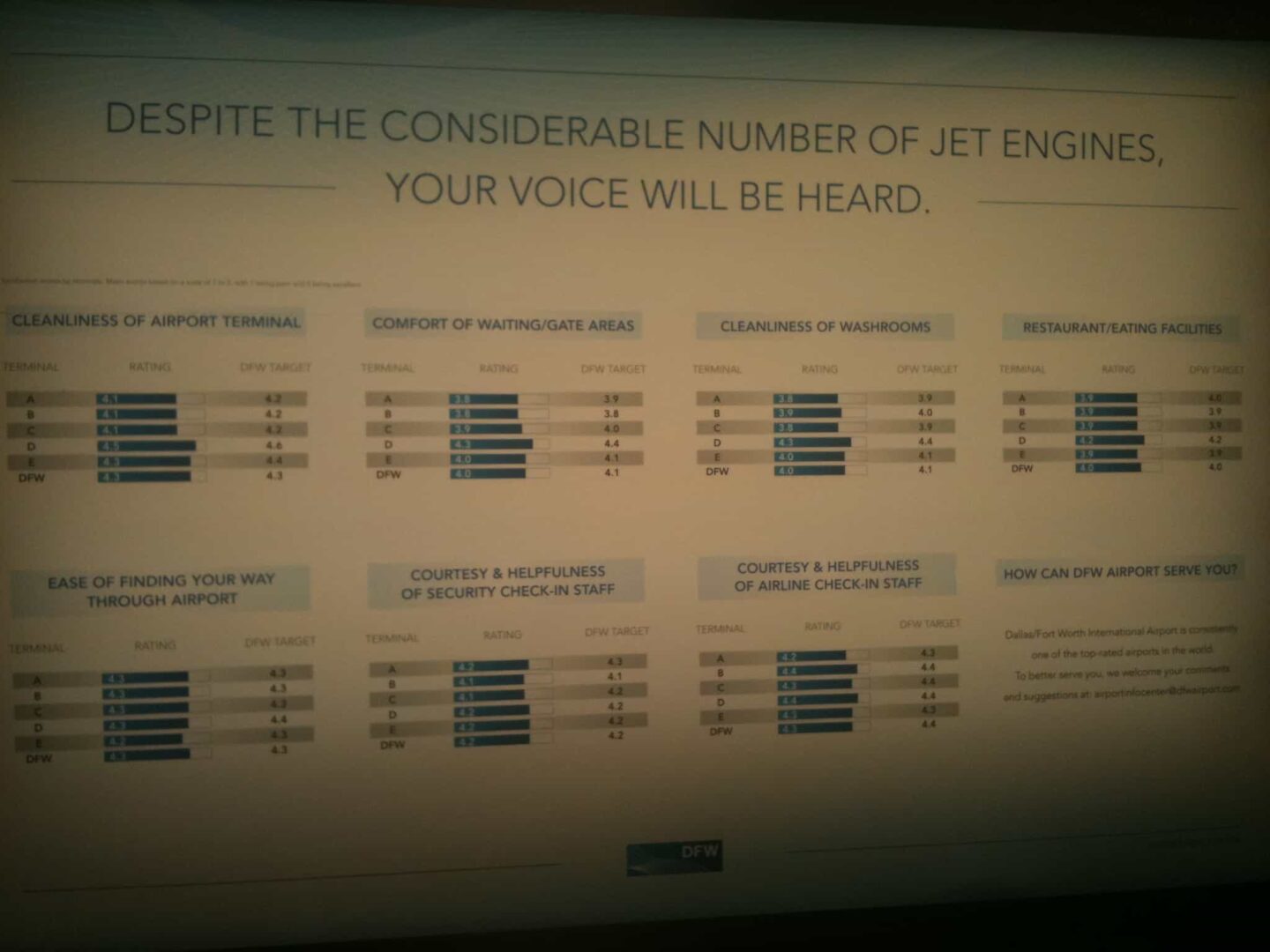

Here is the big sign that's on the wall in Terminal A at DFW.

There are seven metrics tracked and posted for each of the five DFW terminals. What are we supposed to make of this? That they care about customer service and quality?

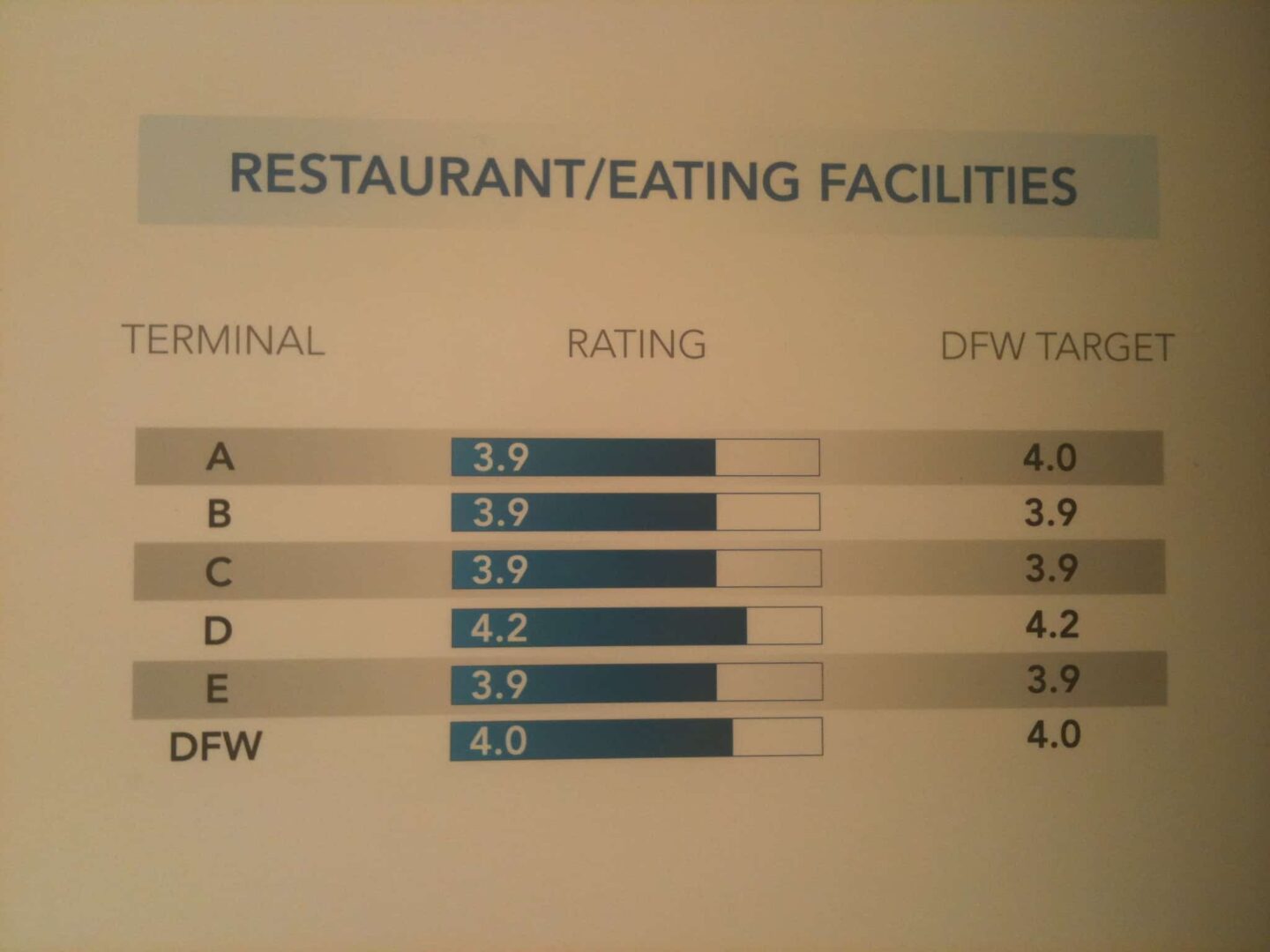

Let's look at one sign for closer inspection – the ratings for restaurant and eating facilities.

Who sets these arbitrary targets? Who decides that 4.0, or 3.9 to be obnoxiously precise, is good enough? Why do different terminals have different targets? Does Terminal D, with a high volume of international flights, have passengers with higher expectations?

These comparisons are pretty meaningless. Are we supposed to feel good that they are mostly hitting targets? If the target had been set to 4.5 or 3.5, how would we be expected to react? Also, is there anything meaningful here for managers to use in decision making? Probably not! There's probably a lot of back patting taking place because they are hitting targets.

Did the manager of Terminal A lose out on a bonus for missing their target by 0.1? Are the other terminals supposed to stop improving because they hit their target? Wouldn't it be more helpful to talk about WHAT they are doing instead of how they are measuring this? Why is the score low? What would make it higher? Is it a poor choice of restaurants (mostly fast food, except for Terminal D) or poor execution? High prices? I'd feel more assured that quality improvement was coming if they gave at least one cause or reason for the scores not being higher. That might demonstrate some understanding of the system.

As Donald Wheeler writes about in his outstanding book Understanding Variation: The Key to Managing Chaos, we need real process understanding. A single data point, or two data points, has little meaning. A comparison to target might not tell us anything about quality.

If we were going to rely on a measure like this, we would do better to see a run chart showing the data and its trends over time. Statistical Process Control would be very useful here, as Wheeler teaches.

The SPC methods aren't that complicated to put into place, as I write about in my book, Lean Hospitals: Improving Quality, Patient Safety, and Employee Satisfaction. Instead of comparing patient satisfaction scores to an arbitrary target, track their performance over time in a run chart. Calculate upper and lower control limits so that you don't over-react to every up and down in the data, or to every above-average or below-average data point in a stable system. You don't need a master's degree in statistics to do this.
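To make the control limit calculation concrete, here is a minimal sketch in Python of one common way to do it, the XmR (individuals and moving range) chart. The function name `xmr_limits` and the sample scores are made up for illustration; they are not data from DFW or from any hospital. The 2.66 multiplier is the standard scaling constant for this chart type.

```python
from statistics import mean


def xmr_limits(values):
    """Return (center, lower, upper) natural process limits for an XmR chart."""
    if len(values) < 2:
        raise ValueError("need at least two data points")
    # Moving ranges: absolute differences between consecutive points.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = mean(values)
    avg_moving_range = mean(moving_ranges)
    # 2.66 is the usual XmR constant (3 / d2, with d2 = 1.128 for ranges of two).
    spread = 2.66 * avg_moving_range
    return center, center - spread, center + spread


# Hypothetical monthly satisfaction scores, invented for this example.
scores = [3.9, 4.1, 4.0, 3.8, 4.2, 4.0, 3.9, 4.1, 4.0, 4.0]
center, lower, upper = xmr_limits(scores)
print(f"center={center:.2f} lower={lower:.2f} upper={upper:.2f}")
```

With limits like these drawn on the chart, a month that lands inside them is most likely just routine variation, whatever the posted target happens to be.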

I've helped departments, like hospital laboratories, use this method for looking at turnaround time trends over time – looking for “out of control” data points that indicate a “special cause” worth investigating. Instead of asking “are we hitting our targets?”, a better question is “is our process stable?” What quality would we expect to have next month or next quarter based on past performance? We can't tell from a simple display like this.
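As a rough illustration of what looking for "out of control" points can mean in practice, the sketch below flags two commonly used signals: a single point outside the limits, and a run of eight consecutive points on one side of the center line. The function name `special_cause_signals`, the choice of these two rules, and the turnaround times are assumptions for the example, not a description of what any particular laboratory did.

```python
from statistics import mean


def special_cause_signals(values, run_length=8):
    """Return sorted indexes of points that suggest a special cause."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = mean(values)
    spread = 2.66 * mean(moving_ranges)  # standard XmR scaling constant
    lower, upper = center - spread, center + spread

    flagged = set()
    # Rule 1: any single point outside the natural process limits.
    for i, value in enumerate(values):
        if value < lower or value > upper:
            flagged.add(i)

    # Rule 2: `run_length` consecutive points on one side of the center line.
    run_start, run_side = 0, 0
    for i, value in enumerate(values):
        side = (value > center) - (value < center)  # +1 above, -1 below, 0 on line
        if side == 0 or side != run_side:
            run_start, run_side = i, side
        if side != 0 and i - run_start + 1 >= run_length:
            flagged.update(range(run_start, i + 1))
    return sorted(flagged)


# Hypothetical lab turnaround times in minutes, invented for this example.
times = [44, 46, 45, 47, 43, 70, 45, 46, 44, 47, 45, 46]
print(special_cause_signals(times))  # [5]
```

Only the 70-minute result is flagged as worth investigating. The other ups and downs fall within what this process routinely produces, so asking for an explanation of each one would be over-reacting.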

If you're new to SPC, maybe I haven't explained this well. Wheeler's book does a great job of teaching this approach, and I highly recommend it. I think this whole approach to quality and management is far under-appreciated, even in Lean circles.

What do you think? What do you see in your organization?


I certainly don’t know why sharing the targets publicly makes any sense. Most of the food is usually franchised, so a terminal’s score is going to be an amalgamation of many different managers, which dilutes its meaning even more. And of course we don’t know what question they are asking to get this score either. I remember going through Chinese customs and there was an electronic poll taker for customer satisfaction in front of you. Am I really going to say “frowny face” (they were relative levels of smiley) while having someone judge my fit for passage? Yeah, right.

I’m not sure these are arbitrary. They seem oddly specific. They could come from long-term trends, or benchmarking of similar food options at other airports, or whatever.

Of course, in the end, is DFW where most of us go for benchmarking? Not really. Not even among airports, where I would rank DFW near the bottom. And we all know what wonderful experiences we have in airports (note sarcasm).

Jamie Flinchbaugh's last blog: What NOT to Learn from the Undercover Boss

I believe that metrics have two primary objectives: 1) to assess whether you are on track for performance and 2) to drive transformation. It would appear they are on track to meet their low expectation of performance. But I wonder how this metric would cause any change in behavior toward improvement. This seems to reflect an embrace of mediocrity. It creates an environment where everything is okay and no improvement is necessary. I wonder if they get any complaints. If so, and if they are serious about customer satisfaction, they may want to revisit their metrics.

This reminds me of the Cost of Quality metric. It was stated recently that our COQ metric is 8% of sales. What exactly am I supposed to glean from this? Is it too high or too low? Do I spend more on prevention or less? This metric doesn’t meet either objective above. I think there are some benefits to the metric, but they can’t be explained as a percent of sales. Thoughts?

This really hits home for me. I spent a few years in a facility getting people to use trend charts and SPC to understand what the process is doing. We worked on getting shifts in the data through problem solving and had great success. Now I am with a different company, and in the facility I am currently in, trends are rare. They have an arbitrary target to achieve. Take OEE, for example: 50%. Well, the 50% doesn’t relate to the production demands, and there are no trend charts to understand how we are getting better from day to day or week to week. If a trend chart does exist, there is no emphasis on using it as a tool to improve.

Jamie stated “I certainly don’t know why sharing the targets publicly makes any sense.” As soon as I read the article and saw the picture it struck me as a PR ploy – it is mainly for the public. It is ambiguous for a reason…it does not really matter to the management of the eating establishments. What matters is to convince customers that they are being served in an acceptable manner. It also sounds a warning to me that if our company is really serious about improvements, we cannot allow ourselves to manipulate metrics to simply appear to be doing something when reality shows something different. You cannot fool all the people all of the time, even with bogus metrics to back you up.

I’d tend to agree the sign is more a P.R. tool than a management method. A cynic would ask if the goal was set knowing the typical average scores, so they could say “we are meeting target”, no problem here.

On a somewhat related note, I often see people confuse the expected result from a stable system (the “mean” in a control chart) with a target/goal. Just because you average 3 patient falls per month, your goal shouldn’t be 3, or even 2. The “target” should be zero in the sense that we should always work to improve the system because one patient fall might be one injury too many. “But zero isn’t achievable!” people will argue, and “not all falls hurt people” but is there any defensible goal for this, or employee injuries, that’s > 0?

I can live with the airport food goal not being 5.

Mark: I completely agree with your belief that there is no defensible reason for a goal >0 on healthcare/safety metrics such as patient falls. Ironically, Dr. John Toussaint, in his “Organizational Transformation Blog # 1: Purpose,” which you seem to recommend, praises a healthcare organization’s strategic purpose statement (ThedaCare’s?) that says: “Our strategy is to deliver measurably better value to our customers defined as 3.4 defects/million opportunities, no interruptions in customer flow i.e. waiting and/or workarounds, and lowest cost.” I similarly find the goal of 3.4 defects/million opportunities indefensible. What further troubles me is, how do you operationally define/measure the number of opportunities for “defects” during surgery; isn’t there one virtually every moment? And do they expect this goal to be meaningful or actionable? Do they really expect people to come to work each day at a hospital saying “Today I must deliver 3.4 defects/million opportunities”? [I attempted to comment about my concerns on the blog about a week ago, but I guess it was rejected for posting since at the time I am writing this the blog still shows a “No comments yet” message.]

I also found it interesting to compare some of the implied reproof in this thread about public posting of performance data, with Dr. Toussaint’s new “Organizational Transformation Blog # 3 Process:Transparency” in which he states: “Actual real time performance data reported in a public way drives improvement faster than any other action. We know because it’s happened at Thedacare and all across Wisconsin. As performance has been publicly reported resources have focused on fixing problems. [sic]”

Re your comment: “Did the manager of Terminal A lose out on a bonus for missing their target by 0.1?” Actually, although it’s done all the time, combining together and averaging metrics of that type (qualitative customer feedback survey ratings and the like) is technically wrong, making a 0.1 “difference” all the more meaningless. The measurement scale is what is known as an ordinal scale, meaning the numbers can be ordered (e.g., a rating of 4 is higher than a rating of 3), but they can’t rationally be added, subtracted, multiplied, or divided (thus they can’t be rationally averaged). There are lots of reasons for this prohibition, all essentially based on the subjectivity of the numbers. For instance, your idea of a 4 might be my idea of a 3, so it is not correct to say they form an average of 3.5. Also, the difference between a 1 and a 2 may not be the same size as the difference between a 3 and a 4, even though the gap on the scale is 1 for both. Furthermore, although we can say a 4 is a higher score than a 2, for example, there is no way of knowing that it is twice as good. So “averaging” a 4 rating with a 2 rating and calling it a 3, is mathematically irrational.

I, like you, am an avid believer in using statistical process control charts (which Don Wheeler prefers these days to call process behavior charts as a more accurate reflection of their power and use), especially the individual moving range charts Wheeler advocates. We like to say Lean is not about the tools, but this particular tool is a culture changer when understood and used properly, as it provides a new way of seeing variation that can by itself lead to permanent cognitive and behavioral change. But I have found a tremendous amount of resistance to using these charts among lean practitioners, especially in “production” processes. As an example, people who use work planning charts that show how much is planned to be produced in a given time segment, say an hour, versus actual production within that time segment, always seem to want a reason for any deviation between the two, even if it’s nothing more than common cause variation. Most importantly, I think Deming’s argument that you can’t meaningfully/effectively/efficiently improve a process that is being measured without first determining if it is relatively stable statistically (i.e., via SPC) is one of the most critical missing understandings of good process improvement amongst current practitioners. Every proponent of continuous improvement should know and practice this tenet.

Simon, thanks for your thoughtful comments. I do share your view that ZERO is the only goal. 3.4 defects per million is still 3.4 too many, especially when it comes to patient safety. That’s why I’ve never had much use for the Six Sigma definitions… it all depends on how you define an “opportunity” in DPMO.

I prefer the statement given by a hospital in the UK, that their goals were:

No Waste

No Waiting

Zero Harm

So, yes, I disagree with John on that point, but I still recommend what he has to say about lean and leadership.

I agree that “process behavior chart” is a better term.